-

Telecommunications Giant Sets the Stage for Scalable, Resilient, and Secure Software Development with JFrog

Learn how this leading multinational telecommunications company transformed its entire development platform and achieved scalability and optimal uptime by moving to the JFrog Software Supply Chain Platform in the cloud.

-

AWS VPN: Types, Benefits, and Troubleshooting Tips

Unlock seamless, secure cloud connectivity! Explore AWS VPN types (Site-to-Site, Client VPN), their benefits, and essential setup tips. Learn how to troubleshoot common issues and discover how tools like Netmaker offer robust alternatives for building scalable, resilient networks.

-

Where Large Language Models (LLMs) meet Infrastructure Identity

As Large Language Models (LLMs) begin interfacing directly with real infrastructure, securing their access becomes critical. This article explores how the Model Context Protocol (MCP) enables LLMs to interact with databases and systems—and how Teleport’s Infrastructure Identity Platform ensures secure, auditable access. Learn how teams can enforce least-privilege policies, prevent over-permissioning, and maintain full audit trails even with AI in the loop.

-

Celebrating a Decade of GitKraken

Join GitKraken's VP of Marketing, Sara Stamas, on a nostalgic journey celebrating 10 years of innovation! From a 30-day project to a global DevEx powerhouse, discover how GitKraken revolutionized Git, embraced community, and evolved with acquisitions, AI, and a new DevEx Platform, all while staying true to its playful, tenacious spirit.

-

Why Bad Workflows Are Silently Killing Your Velocity (and How to Fix It)

Is invisible workflow inconsistency killing your team's velocity? Discover how undefined PR standards, chaotic branching, constant context switching, and poor onboarding silently derail productivity. Learn practical fixes and how to leverage AI to enhance—not replace—structured workflows, unlocking true speed and developer flow.

-

GitKraken Desktop 11.2: Merge Conflicts, Meet AI (and More Dev-Quality-of-Life Wins)

Tired of merge conflict headaches? Discover GitKraken Desktop 11.2, where AI-powered assistance simplifies conflict resolution with context-aware suggestions and confidence levels. Plus, enjoy new quality-of-life wins like hunk-level reverts, enhanced collaboration features, and CLI support for AI agents, supercharging your Git workflow!

-

Grafana 12 release: observability as code, dynamic dashboards, new Grafana Alerting tools, and more

Grafana 12 is here, revolutionizing observability! Discover powerful new tools like "observability as code" with Git Sync, dynamic dashboards with tabs and conditional logic, enhanced alerting, and lightning-fast tables. Level up your monitoring, streamline workflows, and make Grafana truly yours.

-

Adaptive alerting: faster, better insights with the new metrics forecasting UI in Grafana Cloud

Ditch reactive alerting! Discover how Grafana Cloud's new metrics forecasting UI enables proactive, adaptive alerting, letting you fine-tune models in real time, anticipate problems before they strike, and automatically account for seasonality and holidays. All of this is now available for free!

-

Prometheus data source update: Redefining our big tent philosophy

Grafana is redefining its "big tent" philosophy! Discover how purpose-built data sources, like the new plugins for Amazon Managed Service for Prometheus and Azure Monitor Managed Service for Prometheus, enhance interoperability and cater to specific cloud use cases, empowering users to centralize observability without sacrificing vendor neutrality or functionality.

-

GitOps Pros and Cons

Explore the role of GitOps in configuration management and continuous delivery within Kubernetes. Learn about its pros, such as automated deployment and hands-off operation, and its cons, including risks of automated failures.

-

The Challenges of Using Kubernetes at Scale

Kubernetes is powerful but notoriously complex—especially at scale. From rising cloud costs to configuration drift, delayed upgrades, and multi-location inconsistencies, enterprises face major infrastructure challenges. This article explores how Talos Linux and Omni tackle these issues head-on by simplifying Kubernetes management, enhancing security, reducing cloud dependency, and eliminating fragility with an immutable, API-first OS and centralized control interface.

-

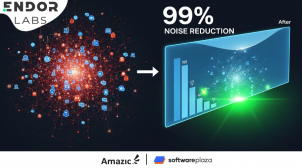

Grip Security Reduces Noise by 99%

Discover how Grip Security, a SaaS security vendor, achieved a staggering 99% noise reduction in software composition analysis (SCA) findings by replacing their old tool with Endor Labs. Learn how they built stronger customer trust, boosted developer efficiency, and gained accurate vulnerability insights without "dependency hell."
